Multipitch Estimation

The task of detecting the fundamental frequencies of concurrent musical instruments at particular times in an input recording is referred to as multipitch estimation. It is an important stage in Music Information Retrieval (MIR), and several algorithms have been developed over the last few decades. Clustering these fundamental frequency estimates into trajectories, one for each instrument in the mixture, is usually called multipitch streaming, and it is even more challenging than multipitch estimation alone.

Joint Estimation

Many existing algorithms analyse the time-frequency representation of the input signal in order to detect different levels of periodicity in every single frame. A good example of this type of process is the one proposed by Duan et al. in 2010.

The principal limitation of this type of system is that the estimates are calculated from a single observation of the original input signal. If a very strong note is played close to a weaker one, it is very likely that the stronger note will mask the weaker one, or at least part of it.

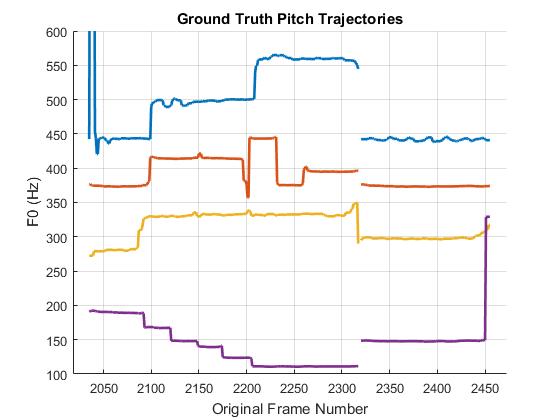

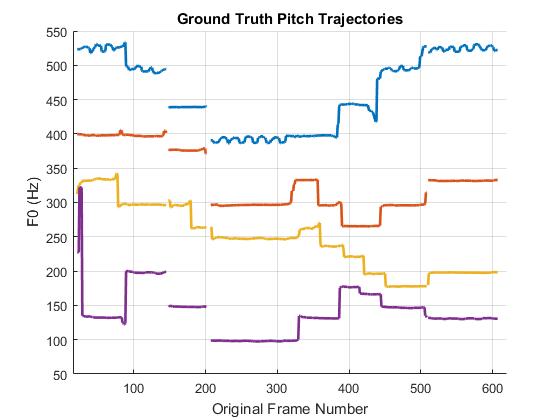

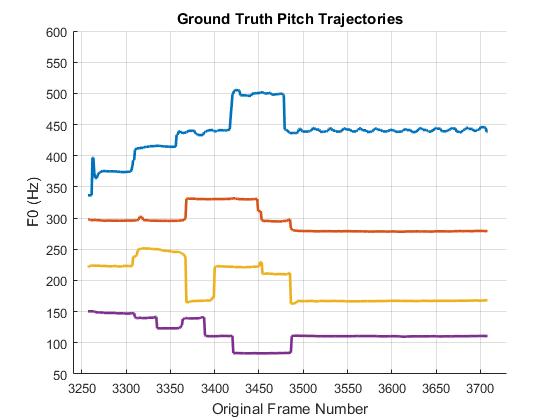

As an example, consider a mixture with polyphony four, with the ground truth pitch trajectories presented in the figure above. Four instruments are playing simultaneously: violin (blue), clarinet (red), saxophone (yellow) and bassoon (purple). Some of the notes are in octave relation, and they are also being played at different levels of intensity. Have a look at the final four notes, the long ones: you'll notice something interesting in a minute.

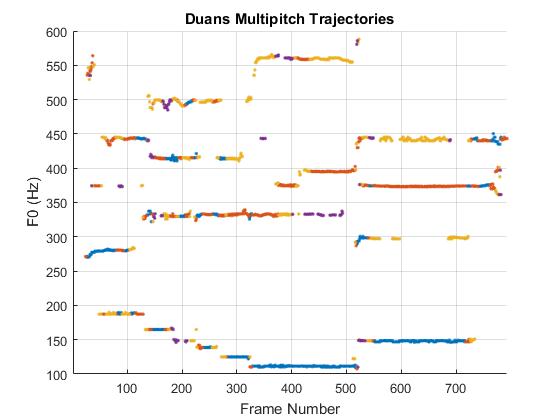

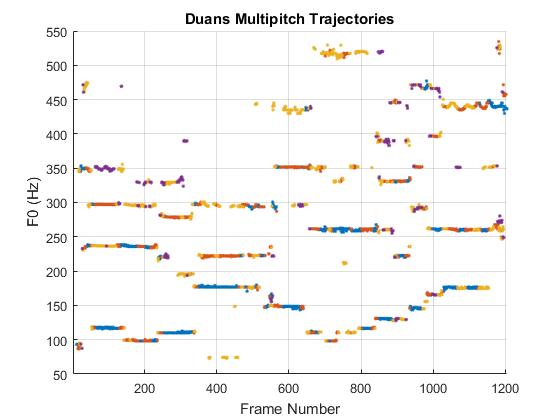

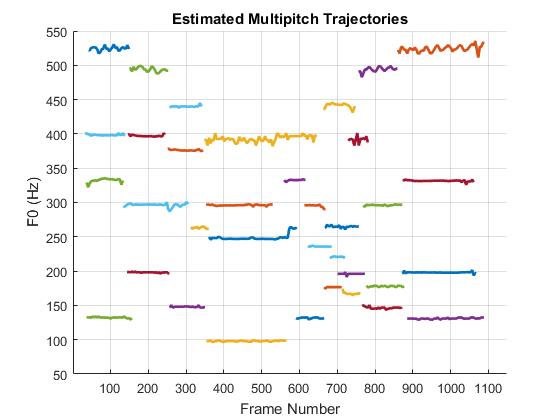

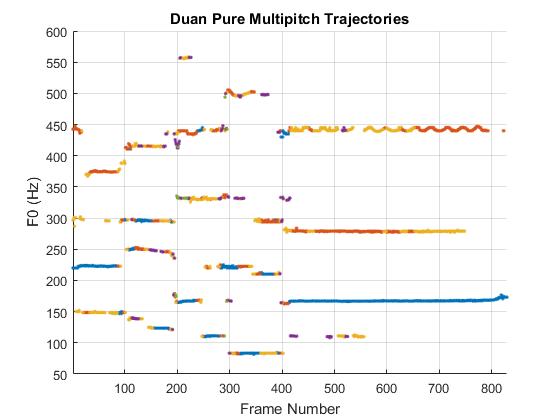

When Duan's multipitch estimator is applied to this audio mixture, a set of pitch estimates is generated according to a probabilistic process. The figure on the right shows what they look like when plotted.

As you can see, the estimates look quite accurate, but something has happened to the final four notes: a good section of the lowest one is missing due to masking. A similar situation can be seen at the very beginning of the excerpt, where most of the note at 300 Hz was not properly detected.

From the figure above, it is also important to notice that the estimates are not organised into continuous trajectories. They are just individual pitches occurring at particular times, but as this is not pitch streaming, that is fine.

An Iterative Note Event-based Approach

In order to overcome some of the limitations described in the previous section, we proposed an iterative note event-based multipitch estimation method in which the final set of pitch estimates is detected in sections. A note event is basically a continuous section of pitch estimates having similar fundamental frequencies and intensities; it might correspond to one single musical note or to several notes with similar pitches.
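To make the idea of a note event more concrete, here is a minimal sketch of how frame-wise pitch estimates could be linked into events. It is not the method used in this work: the greedy linking rule, the function name `group_note_events`, and the parameters `max_semitones` and `max_gap` are all assumptions chosen for illustration, and intensity is ignored for brevity.

```python
import math


def group_note_events(estimates, max_semitones=0.5, max_gap=1):
    """Greedily link frame-wise pitch estimates into note events.

    estimates: iterable of (frame_index, f0_hz) pairs.
    max_semitones, max_gap: illustrative tolerances (assumed values).
    Returns a list of events, each a list of (frame_index, f0_hz) pairs.
    """
    events = []
    for frame, f0 in sorted(estimates):
        best, best_dist = None, max_semitones
        for event in events:
            last_frame, last_f0 = event[-1]
            gap = frame - last_frame
            if gap < 1 or gap > max_gap:
                continue  # event already covers this frame, or has ended
            # pitch distance to the event's latest estimate, in semitones
            dist = abs(12.0 * math.log2(f0 / last_f0))
            if dist <= best_dist:
                best, best_dist = event, dist
        if best is None:
            events.append([(frame, f0)])  # start a new note event
        else:
            best.append((frame, f0))  # extend the closest open event
    return events
```

A fuller version would also compare the salience of neighbouring estimates, since a note event is defined by similar intensity as well as similar pitch.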

The process starts by using Duan's algorithm to generate the initial set of pitch estimates in every frame, which are then further analysed to measure their intensity or salience. The estimates are then organised into note event candidates and the predominant one is selected based on its total salience.

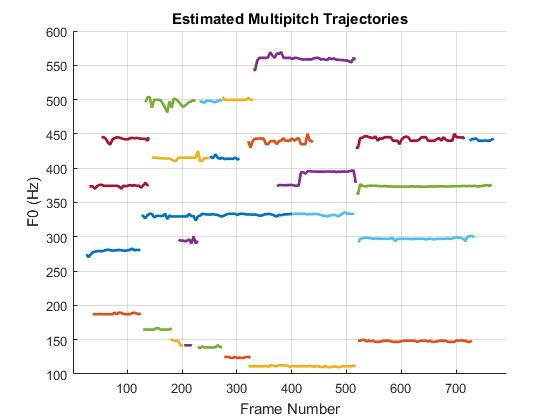

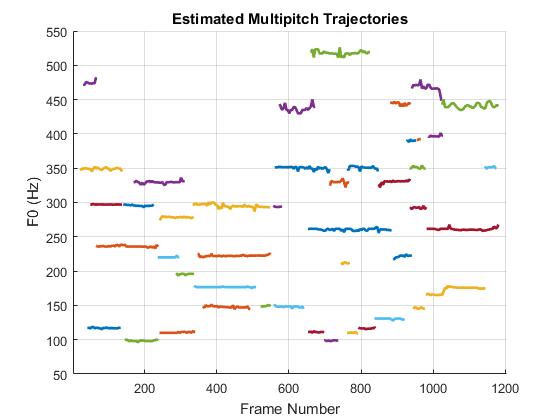

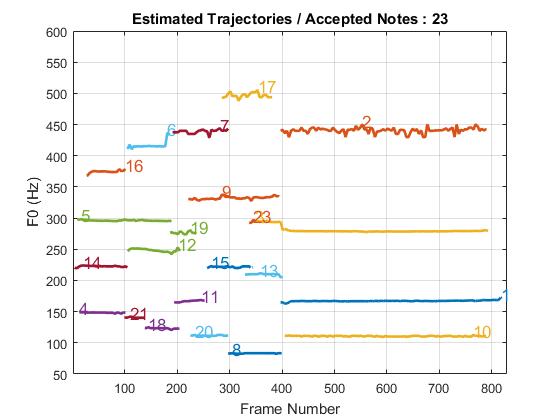

The predominant note event is reconstructed and most of its energy is removed from the original input by subtracting it from the original mixture in the time domain. The residual of this subtraction is then used as an input in the next iteration of the process. This cycle ends when a maximum number of iterations is reached or when the energy of the residual is below a predefined threshold. The figure on the right shows the results.
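The cycle described above can be sketched as a simple loop. This is only an outline under stated assumptions: `extract_predominant` is a hypothetical callable standing in for the whole chain of estimation, salience measurement, event selection and reconstruction, and the default values of `max_iterations` and `energy_threshold` are placeholders rather than the settings used in this work.

```python
import numpy as np


def iterative_peeling(mixture, extract_predominant,
                      max_iterations=10, energy_threshold=1e-3):
    """Repeatedly subtract the predominant reconstructed event.

    extract_predominant: hypothetical callable that takes the current
    residual and returns a time-domain reconstruction of the predominant
    note event (same length as the residual), or None if none is found.
    energy_threshold: stop when residual energy falls below this
    fraction of the original mixture energy (assumed criterion).
    """
    residual = np.asarray(mixture, dtype=float).copy()
    total_energy = float(np.sum(residual ** 2))
    events = []
    for _ in range(max_iterations):
        if total_energy == 0.0:
            break  # silent input, nothing to extract
        if np.sum(residual ** 2) / total_energy < energy_threshold:
            break  # residual energy is below the threshold
        event = extract_predominant(residual)
        if event is None:
            break
        events.append(event)
        residual = residual - event  # subtraction in the time domain
    return events, residual
```

Expressing the threshold relative to the energy of the original mixture keeps the stopping rule independent of the recording level, which is a design choice made for this sketch.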

This new process not only reveals notes that were previously masked by other sounds, but also represents each note as a continuous segment of estimates, introducing a new intermediate level between multipitch estimation and multipitch streaming.

In this example, accuracy was measured using the standard F-score: the proposed method obtained 89.23%, whilst Duan's original process reached 71.37%.
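For readers unfamiliar with the metric, the sketch below shows one common way of computing a frame-wise F-score for multipitch estimates. The matching tolerance of half a semitone and the greedy one-to-one matching are assumptions; the exact evaluation settings behind the figures quoted above are not stated here. The function name `frame_f_score` is also illustrative.

```python
import math


def frame_f_score(reference, estimated, tol_semitones=0.5):
    """Frame-wise F-score for multipitch estimates.

    reference, estimated: dicts mapping frame_index -> list of f0 in Hz.
    tol_semitones: assumed matching tolerance.
    Returns (f_score, precision, recall).
    """
    tp = n_ref = n_est = 0
    for frame in set(reference) | set(estimated):
        refs = list(reference.get(frame, []))
        ests = estimated.get(frame, [])
        n_ref += len(refs)
        n_est += len(ests)
        for f0 in ests:
            # greedily match to the closest unused reference pitch
            best, best_dist = None, tol_semitones
            for i, ref_f0 in enumerate(refs):
                dist = abs(12.0 * math.log2(f0 / ref_f0))
                if dist <= best_dist:
                    best, best_dist = i, dist
            if best is not None:
                tp += 1
                refs.pop(best)  # each reference pitch is matched once
    precision = tp / n_est if n_est else 0.0
    recall = tp / n_ref if n_ref else 0.0
    if precision + recall == 0.0:
        return 0.0, precision, recall
    f_score = 2 * precision * recall / (precision + recall)
    return f_score, precision, recall
```

Precision penalises spurious estimates and recall penalises missed notes, so recovering masked notes mainly improves recall.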

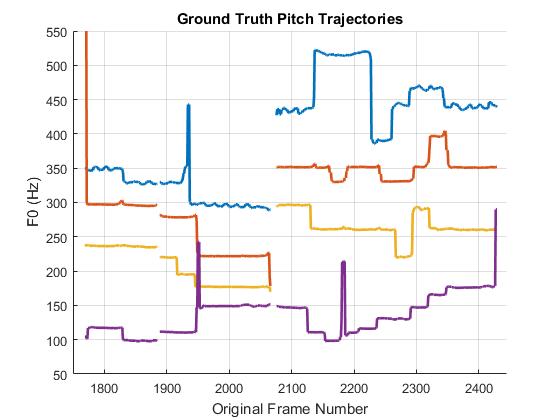

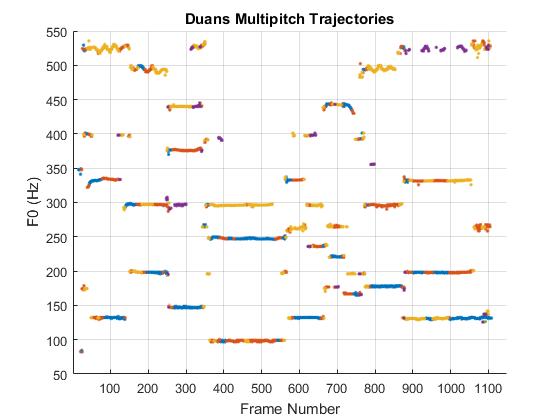

Additional Examples in Polyphony Four

The following graphs show additional examples of multipitch detection in audio sections with polyphony four. The original excerpts were taken from the Bach10 Database, also proposed by Duan et al.