Separation of Lead Instruments and Lead Vocals

Analysing commercial recordings is a significant challenge, largely because of the complex mixing process used to create them. Among the many sources that can be present in such a signal, there is usually a lead instrument or singing voice that attracts most of the listener's attention. Being able to isolate this source from the rest of the mixture can therefore be very useful.

Example 1 - Lead Instrument

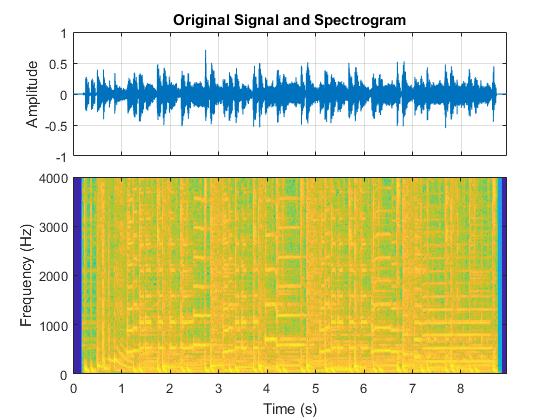

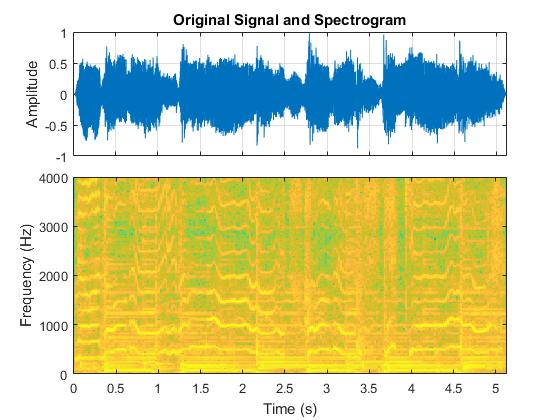

Let's imagine we have a commercial mono recording comprising several sources, including harmonic and percussive instruments. Within the mixture, a trumpet plays the main melody, so it is considered the lead instrument. The original signal in the time domain is shown on the right, along with its spectrogram. The audio excerpt used here was taken from the song The African Breeze by Hugh Masekela. Use the controls below to listen to it.

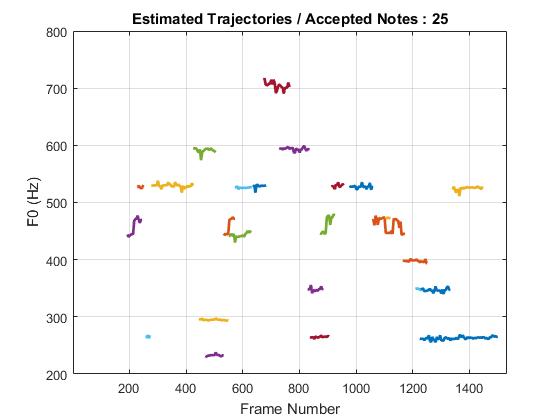

The iterative estimation/separation method is applied to obtain separated note events with fundamental frequencies between 200 Hz and 1300 Hz. In this case, the algorithm was instructed to extract 25 note events from the mixture. The figure on the right shows the final set of detected pitch trajectories.
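The text does not describe how the iterative method searches for fundamental frequencies, so the following is only a minimal sketch of how a search can be confined to a band such as 200 Hz to 1300 Hz. It swaps in harmonic summation, a common pitch-salience technique, and analyses a single frame with no iteration or separation. The function `harmonic_salience_f0` and its parameters `n_harmonics` and `cents_step` are hypothetical names chosen for illustration.

```python
import numpy as np


def harmonic_salience_f0(frame, sr, fmin=200.0, fmax=1300.0,
                         n_harmonics=8, cents_step=10.0):
    """Return the candidate f0 (Hz) in [fmin, fmax] with the largest
    weighted harmonic sum for a single audio frame.

    Illustrative harmonic-summation salience only; this is NOT the
    iterative estimation/separation method described in the text.
    """
    n_fft = len(frame)
    # Hann window reduces spectral leakage between harmonics.
    spectrum = np.abs(np.fft.rfft(frame * np.hanning(n_fft)))
    bin_hz = sr / n_fft

    # Log-spaced candidate grid (cents_step apart) restricted to the band.
    n_candidates = int(np.floor(1200 * np.log2(fmax / fmin) / cents_step)) + 1
    candidates = fmin * 2.0 ** (np.arange(n_candidates) * cents_step / 1200)

    salience = np.zeros(n_candidates)
    for i, f0 in enumerate(candidates):
        for h in range(1, n_harmonics + 1):
            k = int(round(h * f0 / bin_hz))  # nearest FFT bin of harmonic h
            if k >= len(spectrum):
                break  # this and all higher harmonics lie above Nyquist
            # 1/h weighting penalises sub-harmonic (octave-down) candidates.
            salience[i] += spectrum[k] / h
    return candidates[np.argmax(salience)]


# Usage: a synthetic harmonic tone at 440 Hz (amplitudes 1/h).
sr = 16000
t = np.arange(4096) / sr
frame = sum(np.sin(2 * np.pi * h * 440.0 * t) / h for h in range(1, 9))
print(round(harmonic_salience_f0(frame, sr), 1))
```

Because candidates outside the band are never scored, a bass line below `fmin` cannot be chosen as the lead, although its upper harmonics can still add salience to in-band candidates. Running such a function frame by frame would yield a rough pitch trajectory.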

Keep in mind that every line on the graph has a note event associated with it, so to reconstruct the trumpet we only need to select the note events that actually contain trumpet sound.
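Reassembling a source from a chosen subset of note events can be sketched as an overlap-add of the events' waveforms. This assumes each event is stored as a separated time-domain signal plus a non-negative onset in samples; the `NoteEvent` container and the `reassemble` function are hypothetical, and the `selected` indices stand for the events picked by listening, as the text describes.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class NoteEvent:
    """Hypothetical container for one separated note event."""
    onset: int          # start position in the mixture, in samples (>= 0)
    signal: np.ndarray  # separated waveform of the event


def reassemble(events, selected, length):
    """Overlap-add the chosen note events into a signal of `length` samples."""
    out = np.zeros(length)
    for idx in selected:
        ev = events[idx]
        if ev.onset >= length:
            continue  # event starts after the end of the output
        # Truncate events that run past the end of the output.
        end = min(ev.onset + len(ev.signal), length)
        out[ev.onset:end] += ev.signal[:end - ev.onset]
    return out
```

For the trumpet, one would call something like `reassemble(events, trumpet_ids, len(mixture))`, where the hypothetical `trumpet_ids` lists the indices of the kept events; overlapping events simply add.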

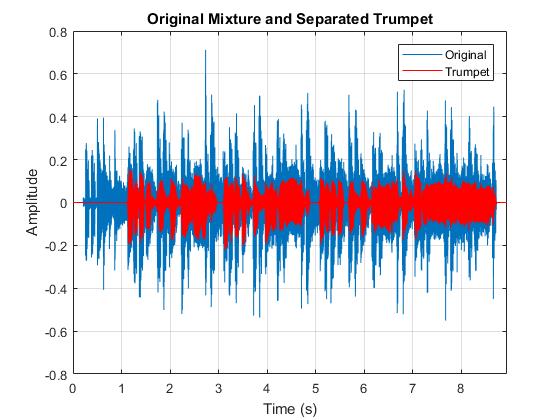

Finally, 18 of the 25 note events were used to reassemble the lead trumpet, while the rest were discarded. The figure on the right compares the reconstructed lead trumpet with the original mixture.

Now, by subtracting the estimated source from the original mixture, we can remove most of the trumpet sound and obtain a good approximation of the accompaniment. Use the following controls to listen to both the separated trumpet and the estimated accompaniment.
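The subtraction step amounts to a sample-wise difference, sketched below under the assumption that the mixture and the reassembled lead are mono, sample-aligned and of equal length. The function name `estimate_accompaniment` is hypothetical.

```python
import numpy as np


def estimate_accompaniment(mixture, lead):
    """Approximate the accompaniment by subtracting the estimated lead.

    Both inputs are assumed to be mono and sample-aligned.
    """
    mixture = np.asarray(mixture, dtype=float)
    lead = np.asarray(lead, dtype=float)
    if mixture.shape != lead.shape:
        raise ValueError("mixture and lead must have the same shape")
    return mixture - lead
```

Since the difference is exact, any trumpet sound the lead estimate misses stays in the accompaniment, and any accompaniment wrongly captured in the lead estimate is removed from it.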

Example 2 - Lead Vocals

A similar approach can be used to estimate the lead vocals in a commercial recording. In this second example we will use an excerpt from the song We Are Young by Savannah Outen (originally by Fun). We will assume the recording is in mono format. As before, the time-domain signal and its spectrogram are presented on the right. Use the controls below to listen to the original track.
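If the source file is actually stereo, one simple way to satisfy the mono assumption is to average the channels. The sketch below assumes samples along the first axis and channels along the second; `to_mono` is a hypothetical helper, not part of the described method.

```python
import numpy as np


def to_mono(audio):
    """Average the channels of an (n_samples, n_channels) array into mono.

    A 1-D input is assumed to be mono already and is returned unchanged.
    """
    audio = np.asarray(audio, dtype=float)
    if audio.ndim == 1:
        return audio
    return audio.mean(axis=1)
```

Averaging (rather than summing) keeps the downmix in the same amplitude range as the original channels.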

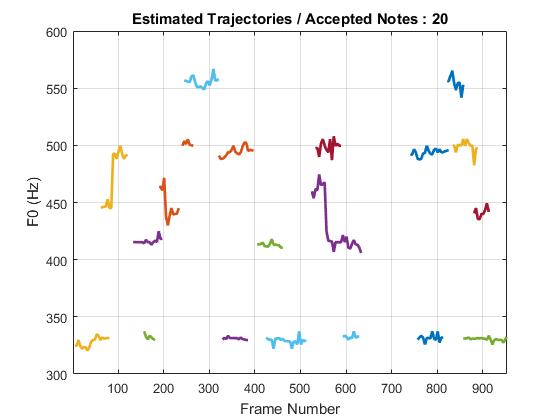

In this case, the estimation of note events was restricted to fundamental frequencies between 300 Hz and 1300 Hz to avoid capturing other sources playing at lower frequencies, and the algorithm was instructed to extract a total of 20 events. The figure on the right shows the final set of estimated pitch trajectories.
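For reference, a band from $f_{\min}$ to $f_{\max}$ spans $12\log_2(f_{\max}/f_{\min})$ semitones, so raising the lower limit from 200 Hz to 300 Hz narrows the search range by about seven semitones:

$$
12\log_2\frac{1300}{200} \approx 32.4 \text{ semitones}, \qquad 12\log_2\frac{1300}{300} \approx 25.4 \text{ semitones}
$$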

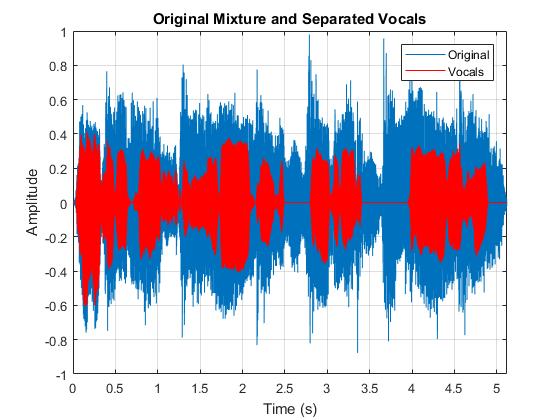

The lead vocals were reconstructed using 17 note events. Listening to the results, you will notice that two short notes are missing, mainly because of their short duration and weak harmonic content, but also because they are heavily masked by other sources. The figure on the right compares the reconstructed vocals with the original mixture. Use the following controls to listen to the separated vocals.